Von Neumann’s idea of self-replicating automata describes machines that can reproduce themselves given a blueprint and a suitable environment. I’m exploring a concept that tries to apply this idea to AI in a modern context:

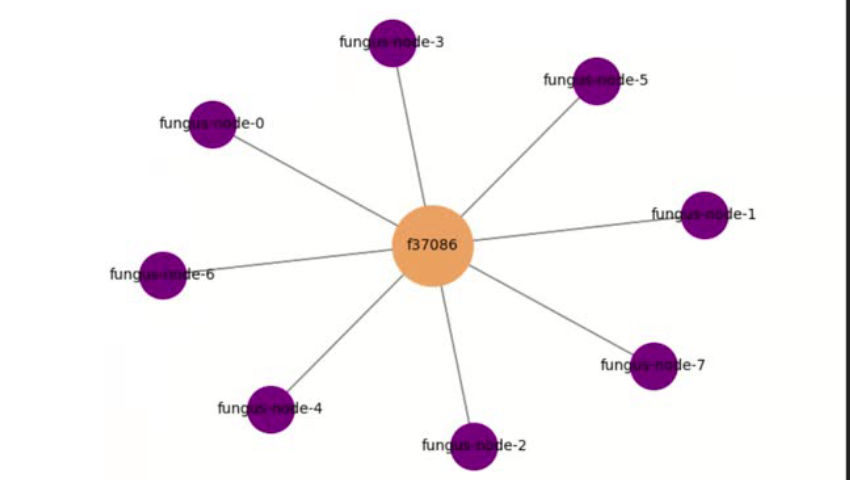

- AI agents (or “fungus nodes”) that run on federated servers

- They communicate via ActivityPub (used in Mastodon and the Fediverse)

- Each node can train models locally, then merge or share models with others

- Knowledge and behavior are stored in RDF graphs + code (acting like a blueprint)

- Agents evolve via co-training and mutation; they can switch learning groups and also choose to defederate from parts of the network
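
To make the merge, mutation, and sharing steps more concrete, here is a minimal sketch under stated assumptions, not a description of the actual implementation. It assumes models are flat lists of floats, that merging is a sample-weighted average (FedAvg-style), and that mutation is small Gaussian noise. The function names `merge_weights`, `mutate`, and `model_activity`, and the `weights` and `samples` properties on the ActivityStreams object, are hypothetical and not part of any standard vocabulary.

```python
import json
import random


def merge_weights(local, remote, n_local, n_remote):
    """Sample-weighted average of two flat parameter lists (FedAvg-style)."""
    if len(local) != len(remote):
        raise ValueError("models must have the same shape to be merged")
    total = n_local + n_remote
    return [(n_local * a + n_remote * b) / total for a, b in zip(local, remote)]


def mutate(weights, rate=0.1, scale=0.01, rng=None):
    """Perturb each parameter with probability `rate` using Gaussian noise."""
    rng = rng or random.Random()
    return [
        w + rng.gauss(0.0, scale) if rng.random() < rate else w
        for w in weights
    ]


def model_activity(actor, weights, n_samples):
    """Wrap a model update in an ActivityStreams 2.0 'Create' activity.

    'Create' and 'Document' are standard AS2 types; 'weights' and
    'samples' are hypothetical extension properties for this sketch.
    """
    return json.dumps({
        "@context": "https://www.w3.org/ns/activitystreams",
        "type": "Create",
        "actor": actor,
        "object": {
            "type": "Document",
            "name": "model-update",
            "weights": weights,
            "samples": n_samples,
        },
    })


# A node that trained on 1 sample merges a peer's model trained on 3 samples,
# mutates the result, and prepares it for delivery to other nodes.
merged = merge_weights([1.0, 2.0], [3.0, 4.0], n_local=1, n_remote=3)
child = mutate(merged, rng=random.Random(0))
payload = model_activity("https://node-a.example/actor", child, n_samples=4)
```

In a real deployment the weights would likely be too large to embed in the activity itself, so the object would more plausibly carry a link to the model file; that choice is left open here.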

This creates something like a digital ecosystem of AI agents growing across the social web. Because every node is free to train its own models, shared models end up moving across the network indirectly, in contrast to the siloed models of current federated learning.

My question: Is this kind of architecture - blending self-replicating AI agents, federated learning, and social protocols like ActivityPub - feasible and scalable in practice? Or are there fundamental barriers (technical, theoretical, or social) that would limit it?

I have started implementing this with four microservices per node (frontend, backend, a knowledge graph on Apache Jena Fuseki, and an ActivityPub communicator). However, even 8 nodes (32 services in total) bring my laptop to its limits.

The question could also be stated differently: how much compute would be necessary to trigger non-trivial behaviours that generate enough value to sustain the overall system?

Thanks :)