So, this whole thing kicked off because I hit a wall with local storage - it just doesn’t grow with you forever, you know? Plus, putting all my eggs in the basket of other companies felt a bit risky with all the changing rules and government access stuff these days.

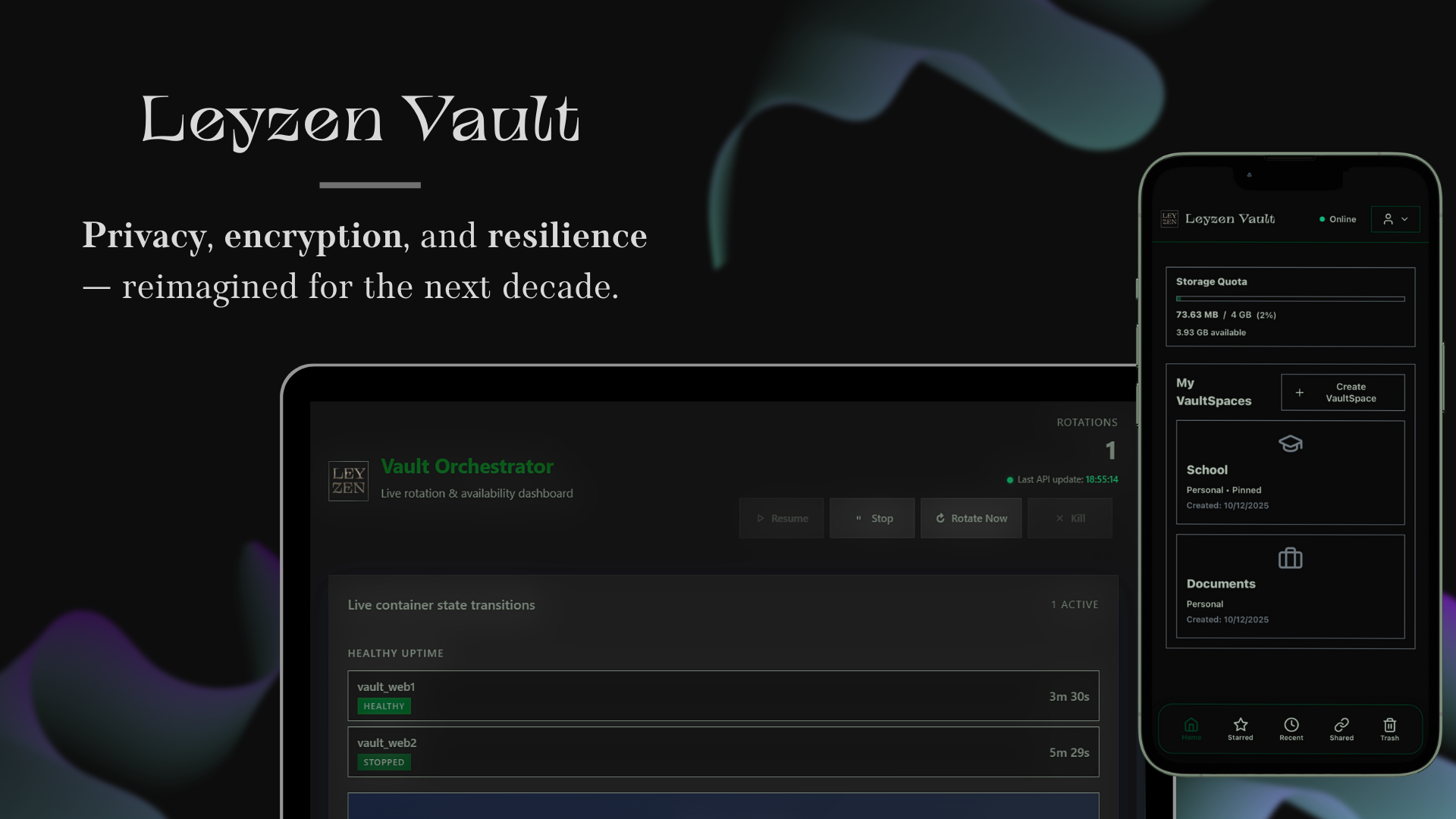

What I ended up with is pretty cool: a personal file vault where I’m in charge. It treats any outside storage like it can’t be trusted, and all the encryption happens right on my computer. I can even use cloud storage like S3 if I need to, but I never lose control of my own data.

Honestly, it just kinda grew on its own; I never set out to build a product. I’m mainly sharing it here to see how other folks deal with these kinds of choices.

You can check it out at https://www.leyzen.com/

I don’t want to dump on anyone, but this is v2.4.0 and v1.0.0 was last month…

With 4 tickets…

I’m also unsure that referring to a zero knowledge architecture is correct here. I think it should be zero trust in this context.

But as the other repos are in French, perhaps the AI was used for translation.

In short - a good idea, but needs time - and clearer explanations of what’s going on

Hey, thanks for the honest feedback, I really appreciate you taking the time to share your thoughts.

Yeah, v1 was pretty rough, I won’t lie. It didn’t even work on a clean install. I was just starting to mess with GitHub back then, so my early work lacked proper tests, workflows, and a good release plan. That’s totally on me.

I rushed v2 out because I didn’t want to keep building on shaky ground. Since then, I’ve really focused on making things stable: adding pre-commit checks, setting up CI workflows, and testing installs on fresh VMs so I know it actually works for other people, not just on my PC.

You’re also right about the words I’m using. Zero trust fits way better than zero knowledge (I translated literally from the French terms 😅), and I need to be much clearer and more exact about that in the docs.

Regarding issues, I’m still hoping more people will check it out and give feedback. But honestly, I’m always happy to chat and answer questions when they come up, that’s exactly what I’m hoping to get more of.

Is this project vibe coded?

Nah, not really. I mostly use AI for the annoying stuff like GitHub workflows, install scripts, and boilerplate code, not the actual backend or frontend code.

Oh, and since I’m French, I also use it to clean up my notes into good English for the README (in response to Jokulhlaups). It’s just a handy tool to speed things up, not some magic button that builds everything with one command. If you look at the commit history, you can see the project grew over time. Definitely didn’t just pop out of a single prompt, haha.

100%

Readme is most definitely. Didn’t look at the code tho

Majority of the code is python and vue? And, what problem is this trying to solve? What does it do that other solutions don’t? I read through the repo docs and it’s just not clear. WTF does it mean “I don’t lose control” over storage on cloud storage?! If it’s on the cloud, you are not in control. May the downvotes rain down on this.

Also, now that I’ve re-read this (I didn’t understand what downvotes meant at first): why does a new project that doesn’t compete with big companies deserve downvotes? I’m just trying to meet tech people and talk about it, that’s all. It doesn’t need money, it doesn’t hurt anyone, and I’m not posting bullshit.

If it doesn’t solve a problem for you yet, that’s fine, it will get better over time. I genuinely want to understand what made you comment like this. And since you’re a moderator, respect btw, but why push people toward hating on it? What’s the goal here, should I delete the repo?

Good questions!

I just stick with Python, Go and Vue because I know them pretty well. I’d rather use a few tools I’m good at than try to use a bunch of different ones. It just makes my code tidier and easier for me to keep up with.

About the problem: the idea is to let you host your own stuff even if your computer isn’t super powerful. You can use storage from other companies, but you don’t have to trust them with your private data. Your files get encrypted on your computer before they even leave it. So, the storage company only ever sees scrambled data, never your actual stuff.

When I say not losing control, I’m talking about you being the only one who can read your data, not where the files are actually stored.
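To make the "encrypt before it leaves your computer" idea concrete, here’s a minimal sketch. It is not the project’s actual code. The function names, the PBKDF2 parameters, and the cipher itself are assumptions: the keystream is a stand-in built from HMAC-SHA256 so the example runs with only the Python standard library, and a real client would use a vetted AEAD such as AES-GCM instead.

```python
import hashlib
import hmac
import secrets


def derive_keys(passphrase: str, salt: bytes) -> tuple[bytes, bytes]:
    """Derive an encryption key and a MAC key. Both stay on the client."""
    material = hashlib.pbkdf2_hmac(
        "sha256", passphrase.encode(), salt, 200_000, dklen=64
    )
    return material[:32], material[32:]


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Illustrative keystream: HMAC-SHA256(key, nonce || counter) blocks."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        block = hmac.new(key, nonce + counter.to_bytes(8, "big"), hashlib.sha256)
        out += block.digest()
        counter += 1
    return bytes(out[:length])


def encrypt_for_upload(plaintext: bytes, enc_key: bytes, mac_key: bytes) -> bytes:
    """Return nonce || ciphertext || tag. This is all the storage provider sees."""
    nonce = secrets.token_bytes(16)
    stream = _keystream(enc_key, nonce, len(plaintext))
    ciphertext = bytes(a ^ b for a, b in zip(plaintext, stream))
    tag = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    return nonce + ciphertext + tag


def decrypt_after_download(blob: bytes, enc_key: bytes, mac_key: bytes) -> bytes:
    """Verify the tag first, then decrypt. Raises if the blob was altered."""
    nonce, ciphertext, tag = blob[:16], blob[16:-32], blob[-32:]
    expected = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise ValueError("blob was modified or the key is wrong")
    stream = _keystream(enc_key, nonce, len(ciphertext))
    return bytes(a ^ b for a, b in zip(ciphertext, stream))


# Hypothetical usage: a plain dict stands in for an untrusted bucket.
untrusted_bucket: dict[str, bytes] = {}
enc_key, mac_key = derive_keys("my passphrase", salt=b"per-user-salt-16")
untrusted_bucket["report.pdf"] = encrypt_for_upload(b"private data", enc_key, mac_key)
```

The point of the sketch is the trust boundary: the keys are derived and used locally, and the bucket only ever holds the opaque blob. The storage provider can delete or corrupt the blob, but it can’t read it, and tampering is detected on download.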

Oh, and yep, I do use AI tools, mainly Copilot. It helps me work faster on things like GitHub Actions, the install.sh script, etc. I don’t really see a reason to hide that.

Thanks a lot for taking a look, even if it’s not totally up your alley!

kinda smells like truenas with its datasets

Can you explain the “rotating containers back end”? I’m trying to understand what that adds to security.

Here’s a simple way to look at it: it’s all about persistence. If someone sneaks a backdoor onto a server or inside a container, that backdoor usually needs the environment to stay put.

But with containers that are always changing, that persistence gets cut off. We log the bad stuff, the old container gets shut down, and a brand new one pops up. Your service keeps running smoothly for folks, but whatever the attacker put there vanishes with the old container.

It’s not about saying hacks won’t ever happen but making it way tougher for those hacks to stick around for long :)
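Here’s a rough sketch of that rotation idea as a pure simulation. It makes no Docker calls and is not the project’s real orchestrator. The class names, the `ephemeral_files` set, and the audit log format are all made up for illustration.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class Container:
    name: str
    running: bool = True
    # Anything written at runtime lives only in this container's filesystem.
    ephemeral_files: set[str] = field(default_factory=set)


class Orchestrator:
    """Hypothetical stand-in for whatever replaces the containers on a schedule."""

    def __init__(self) -> None:
        self._ids = itertools.count(1)
        self.audit_log: list[str] = []
        self.active = self._spawn()

    def _spawn(self) -> Container:
        # A fresh container always starts from the clean image: no runtime files.
        return Container(name=f"vault-{next(self._ids)}")

    def rotate(self) -> Container:
        old = self.active
        fresh = self._spawn()   # bring up the replacement first
        self.active = fresh     # then point traffic at the clean instance
        if old.ephemeral_files:
            # Record what was found before it is thrown away.
            found = sorted(old.ephemeral_files)
            self.audit_log.append(f"{old.name}: discarded {found}")
        old.running = False
        old.ephemeral_files.clear()
        return fresh


orchestrator = Orchestrator()
# An attacker drops a file inside the running container...
orchestrator.active.ephemeral_files.add("/tmp/backdoor.sh")
# ...and the next scheduled rotation discards it along with the old container.
orchestrator.rotate()
```

After `rotate()`, the active container has an empty `ephemeral_files` set and the old one is stopped. The sketch only covers persistence inside the container. It says nothing about secrets an attacker may already have read, which need their own rotation.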

That is not an answer.

Here’s a simple way to look at it

I’m not looking for a simple way to look at it. I want a technical breakdown of why rebuilding back end instances is valuable in a security context.

- When do the rebuilds occur? Are they triggered by some event?

- what happens to session tokens?

- do you have frontend / backend auth? What happens to that?

- are you rotating secrets as well? Compromised back end would imply your secrets can no longer be trusted.

- is data encrypted in massive blobs, or can one request only blocks of data?

- can the app tune storage requirements depending on S3 configuration?

I’ll be blunt with you: your answers to this and others have been very surface-level and scant on technical details, which gives a strong impression that you don’t actually know how this thing works.

You are responsible for your output. If you want chatgpt or github ai tools to help you, that’s fine, but you still need to understand how the whole thing works.

You are making something “secure”, you need to be able to explain how that security works.

nobody here asked for technical details, so I didn’t respond with technical stuff. but now that you ask, I can respond:

- the rebuild occurs periodically. you set the period (in seconds) in the .env. a container named orchestrator stops and rebuilds vault containers by deleting every file that is not in the database and therefore not encrypted (like payloads). for event-based triggers, I haven’t implemented specific ones yet, but I plan to.

- session tokens are stored encrypted in the database, so when a vault container is rebuilt, sessions remain intact thanks to postgres.

- same as the previous point: auth tokens are stored in the database and are never lost, even when the whole stack is rebuilt.

- yes, but not everything. since one container (the orchestrator) needs access to the host’s docker socket, I don’t mount the socket directly. instead, I use a separate container with an allowlist to prevent the orchestrator from shutting down services like postgres. this container is authenticated with a token. I do rotate this token, and it is derived from a secret_key stored in the .env, regenerated each time using argon2id with random parameters. I also use docker networks to isolate containers that don’t need to communicate with each other, like vault containers and the “docker socket guardian” container.

- every item has its own blob: one blob per file. for folders, I use a hierarchical tree in the database. each file has a parent id pointing to its folder, and files at the root have no parent id.

- can the app tune storage requirements depending on S3 configuration? not yet, S3 integration is a new feature, but I’ve added your idea to my personal roadmap. thanks.
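The parent-id tree described above can be sketched like this. It’s a minimal illustration and not the real schema. The `Item` fields, the `is_folder` flag, and storing folders in the same table as files are assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Item:
    id: int
    name: str
    parent_id: Optional[int]  # None means the item sits at the root
    is_folder: bool = False


def children(items: list[Item], parent_id: Optional[int]) -> list[Item]:
    """List the direct children of a folder (or of the root when parent_id is None)."""
    return [item for item in items if item.parent_id == parent_id]


def path_of(items: list[Item], item_id: int) -> str:
    """Rebuild a display path by walking parent ids up to the root."""
    by_id = {item.id: item for item in items}
    parts: list[str] = []
    current: Optional[Item] = by_id[item_id]
    while current is not None:
        parts.append(current.name)
        if current.parent_id is None:
            current = None
        else:
            current = by_id[current.parent_id]
    return "/" + "/".join(reversed(parts))


# Hypothetical rows, as they might come back from the database.
rows = [
    Item(1, "docs", None, is_folder=True),
    Item(2, "taxes", 1, is_folder=True),
    Item(3, "2023.pdf", 2),
    Item(4, "notes.txt", None),
]
```

With these rows, `path_of(rows, 3)` walks from the file up through `taxes` and `docs` to the root, and `children(rows, None)` lists the root entries. Because each file is its own blob, moving a file between folders only changes its `parent_id` and never touches the encrypted data.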

and I understand perfectly why you’re asking this. No hate at all, I like feedback like this because it helps me improve.

Thanks for the reply.

- “military-grade”

I was hoping for an answer. I think OP is saying that the backends are not persistent. So each time data is written or accessed, the backend may be dynamically created or modified. Guessing in lieu of OP’s response. However, the OP’s account is 6 hours old so…

What’s the benefit of E2EE file storage on a self hosted server? I use FileBrowser Quantum, and it’s super nice, as I can access the same shared drives that I access at home.

Hey there! That’s a great question.

So, when you’re just using something by yourself on your own computer, E2EE doesn’t always make a huge difference. You really start to see its value when you bring in outside storage, like S3, or when you have a bunch of people using it.

Think about a company running its own app. If someone uploads sensitive files and doesn’t want the system administrator or the tech team to read them, E2EE comes to the rescue. The files get scrambled before they even leave the user’s device. So, even if the server is in-house, the admin only sees encrypted stuff.

It’s basically about separating who operates the infrastructure from who can actually read the data, which lets people use shared or external storage while knowing their stuff is private.

Defense in depth, for one. It looks like this project is made for protecting your data on cloud storage. I’ve noticed that right now there seem to be a lot of projects built around relatively cheap S3 storage solutions.

Ahh, that makes sense. When I think of self hosting, I think of using your own hardware.

Saying something is “self hosted” when it’s actually hosted by a cloud provider is sort of like saying something was “self coded” when it was actually coded by an LLM.

Self hosting can be stretched to mean you’re hosting your own services on a cloud provider.

Yeah, I have dabbled in that with streaming to multiple platforms via a VPS. It definitely is stretching the definition of self hosting.

I’ve been using a VPS for a while now. I still maintain it, so it’s very much like self hosting.

Yeah, it’s almost exactly the same in terms of software maintenance, but of course you don’t actually host anything. I like the idea that, if the WWW goes down, I’ll still be able to control my smart home (locally of course) and such. Using a VPS is like semi-self hosting. I can’t really think of a good term for it, so I can see why it’s still grouped into self hosting.

Yeah, I only put specific things there. Management and monitoring things. The services are still local.