I find this particular argument strange:

“Dude had suicidal thoughts/machinations long before he used ChatGPT”

So… You’re saying that this person was in crisis for a while, but managed to not kill himself UNTIL he used your service, which helped him plan it?

How … how is that a reasonable argument?

I remember when this happened. I think I commented something like “While ChatGPT enabled this behavior and these sorts of things will continue to happen, the parents needed to be more attentive, especially to his internet behavior. This is on them just as much.”

I still feel that way, but damn, this is such an idiotic take from OpenAI. ChatGPT, as far as I’m aware, is still the biggest LLM app, and it still has these issues.

“It’s the boy’s fault for killing himself.” What a truly powerful take from the world’s dumbest company that enabled the act of suicide. Morons.

I don’t know why you guys are complaining about falling into the mechanical looms, the rules clearly say to not do that.

If a teen can bypass safety measures on your software that easily, then they don’t work 🤷‍♂️

Erm… actually, teenagers bypass security measures better than most adults.

‘That easily’ = having a conversation with it while suicidal, not trying to break it.

There is no wrong way to use it considering they want everyone hooked on it. So it’s their fault. Under ZERO circumstances would I ever say an LLM company is ever in the clear for their LLM guiding people towards killing themselves, no matter what they claim.

“Holding your phone wrong” moment?

More like gun, and yes

You people are batshit insane.

AI is as dangerous as guns!!! 🤣🤣🤣🤣🤣👌👍

For mental health? Yes, a robot that says “you’re absolutely right” to every prompt is as dangerous as a loaded gun.

> Yes, a robot that says “you’re absolutely right” to every prompt

Worse than that: a robot that can construct conversations so convincingly that people are misled into thinking it is a genuine fellow intelligence, that is being sold to us as a miraculous technological panacea that can solve all problems, and that says “you’re absolutely right” to every prompt. It’s the combination of apparent legitimacy and relentless utter bullshit that makes it so dangerous. Either one on its own wouldn’t be so bad. People are good at disregarding bullshit as long as they can determine that it might be bullshit. However, when they’ve been convinced the source is an authority, and the authority is telling them bullshit and relentlessly leading them onwards into further bullshit, they will accept it without further question. We see this happen in cults, and many other places in our society, but never on this scale.

AI is an illusion, but it’s a very convincing one. Any snake oil salesman would be jealous of what a good show it puts on.

👌👍🤣 The shit some of you are willing to type in public is wild.

And they call AI a soulless machine…

God made man in his own image, something something…

Oh, that’s not a lawsuit in the making… Ugh, I can’t wait for AI to lose all its staunch supporters.

It’s not even real AI either, just generative machine learning that outputs what we think we like based on a prompt. No prompt, no response, not intelligent.

Yeah, which is what really grinds my gears: people let these techbros get away with shaping the narrative about their dressed-up LLMs that aren’t ever going to be intelligent. LLMs themselves can be useful for stuff, but they will never be a Swiss Army knife that can be used for anything. True AI is going to be the product of proper research and investment of effort from multiple disciplines; neuroscience, psychology, and other tech fields will need to work together to manifest something that is artificially intelligent.

Real AI was invented in the 70s and it’s called expert systems.

It is AI, just not “AGI” or whatever word implies it’s extremely capable of everything. We even call those algorithms that play chess “AI”.

> Oh, that’s not a lawsuit in the making…

The article is talking about one of the ongoing lawsuits against the company.

My mistake. Commenting on the internet while tipsy is… a choice. I forgot to read this article.

I’m sure then it should be placed under the mature section of the app store, after proper age verification and proper, repeated warnings about how this app can get you killed. I’m sure it will be great for their business.

“AI” is an exercise in ignoring Mary Shelley’s warning.

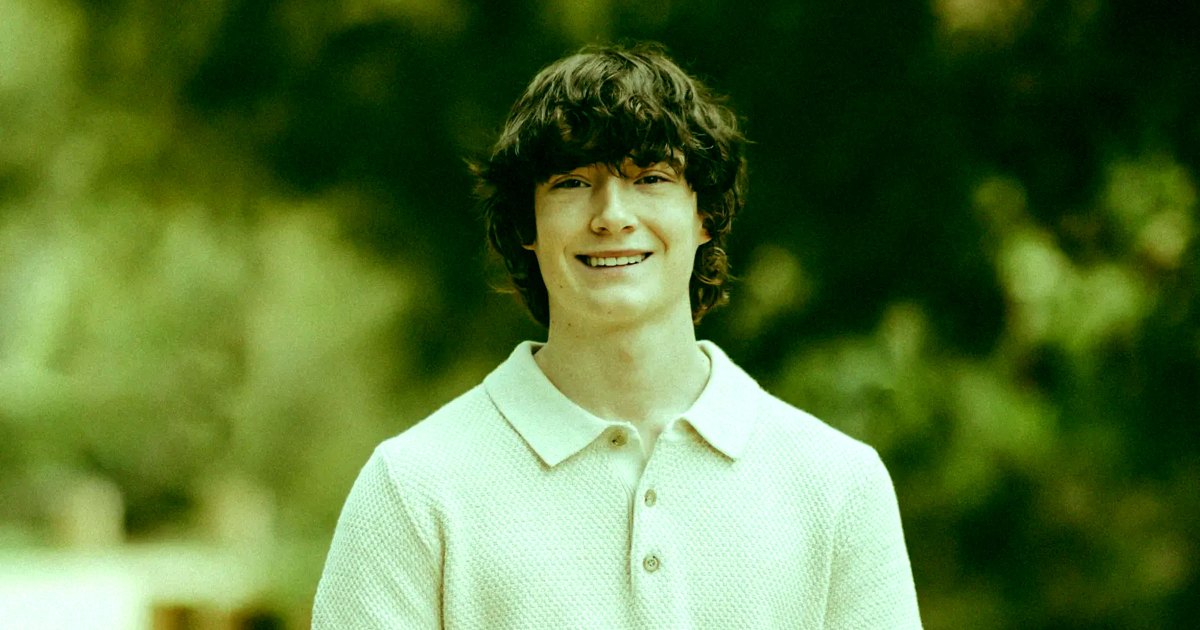

That poor kid. My heart goes out to his family.

Looks like we can thin our own herd now