Why does it seem like he repeats himself in a slightly different way? Did he get an LLM to summarize what happened, and then summarize the summary? Who talks like this?

Definitely wrote a paragraph and asked an LLM to summarize it.

Joke’s on us, “he” is actually an LLM.

That post reads like slop vomit: what a human could have written in one paragraph somehow became twenty for the slop parrot.

Motherfucker blew $20 in a night, and extrapolated it to several hundred bucks a month. All for what is essentially a labeled alarm. You know, something your phone can already do, no AI necessary, for FREE.

This technology is a bad joke. It needs to die.

Also extrapolated a maximum of 3-4 sentences into several paragraphs somehow

Pairing an automated process with something that costs money without error checking is like putting a credit card on file with a hooker. You’re definitely running the risk of waking up broke.

At least with the hooker you can get a hug. AI doesn’t even do that.

Is this the first step towards sex bots?

Have you seen the humanoid robot XPENG made? They gave it boobs.

Why use an LLM to solve a problem you could solve using an alarm clock and a Post-it?

Programming nitpicks (for lack of a better word) that I used to hear:

- “don’t use u32, you won’t need that much data”

- “don’t use `using namespace std`”

- “sqrt is expensive, if necessary cache it outside loop”

- “I made my own vector type because the one from standard lib is inefficient”

Then this person implements time checking via an LLM over the network, at $0.75 per check lol

We used to call that premature optimization. Now we complain tasks don’t have enough AI de-optimization. We must all redesign things that we have done in traditional, boring not-AI ways, and create new ways to do them slower, millions or billions of times more computationally intensive, more random, and less reliable! The market demands it!

I call this shit zero-sum optimization. In order to “optimize” for the desires of management, you always have to deoptimize something else.

Before AI became the tech craze du jour I had a VP get obsessed with microservices (because that’s what Netflix uses so it must be good). We had to tear apart a mature and very efficient app and turn it into hundreds of separate microservices… all of which took ~100 milliseconds to interoperate across the network. Pages that used to take 2 seconds to serve now took 5 or 10 because of all the new latency required to do things they used to be able to do basically for free. And it’s not like this was a surprise. We knew this was going to happen.

But hey, at least our app became more “modern” or whatever…

`using namespace std` is still an effective way to shoot yourself in the foot, and if anything it’s a bigger problem than it was in the past, now that `std` has decades’ worth of extra stuff in it that could collide with a name in your code.

Nooo you don’t understand. It needs to be wrong up to 60% of the time. He would need a broken clock, a window and a Post-it note.

For the clicks.

Or, if you’re being fancy, poll a time server.

That would work great as well, but an alarm clock is a technology developed in the Middle Ages.

Or go off grid style and leave your curtains open 😂

People who mastered calendar, clock and notes apps in their smartphones be like:

I don’t… quite get this. Even assuming the LLM made legit queries, you’re OK with paying 75 cents every time you perform what’s essentially a web search? Then add in the fact that it hallucinates constantly: how many times a day are your search results blatant lies that you paid 75 cents to be told?

And the AI companies are still losing money after charging 75c!

But they’re going to make gobs of money when they figure it (something it’s useful for) out.

They just need to burn some more… Money… First.

Burn money and destroy the environment. Double win!

What an unhinged thing to rely on an llm for.

Had a Cron job running

So they set up a cron job to ask an LLM to remind them of something that the cron job itself could have just reminded them of?

Everything about this is so wrong lol

But a simple reminder is so… impersonal…

Why settle for a basic reminder when you could have a personal AI agentic assistant tailored toward your needs to remind you of things like “buy milk” or “wash your hands after going to the bathroom”?

Your personal AI agentic assistant gets to know you on a deeper level, learning your preferences and adjusting to your unique needs, so that it feels more like having a loyally devoted butler to gently wake you and remind you to wipe your ass.

Some day, as the technology matures, maybe your personal AI agentic assistant will even wipe your ass for you, or buy your milk. But only after washing its hands in between!

-him, probably

It only makes sense if it was checking for it being daytime (i.e. after sunrise and before sunset) which you cannot do in cron, rather than check for a specific hour.

Even then, using an LLM is about the stupidest way imaginable to do it, since “when are sunrise and sunset at a given latitude, longitude and day of the year” can simply be calculated with a formula or looked up in a table of values. It’s not as if sunrise and sunset times for a given latitude, longitude and day of the year change from year to year.
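For the curious, here is a rough sketch of the “just calculate it” approach. It assumes the approximate solar position formulas NOAA publishes (fractional year, equation of time, solar declination, and a 90.833° zenith to account for refraction), which should land within a few minutes of the real times at mid latitudes. The function names are made up for illustration; longitude is positive east and all times are UTC.

```python
from __future__ import annotations

import math
from datetime import date, datetime, timezone

# Solar zenith angle for "official" sunrise/sunset: 90 degrees plus about
# 0.833 degrees for atmospheric refraction and the sun's apparent radius.
ZENITH_DEG = 90.833


def _solar_terms(day: date) -> tuple[float, float]:
    """Return (equation of time in minutes, solar declination in radians)."""
    # Fractional year in radians, evaluated at 00:00 of the given day.
    g = 2 * math.pi / 365 * (day.timetuple().tm_yday - 1)
    eqtime = 229.18 * (0.000075 + 0.001868 * math.cos(g) - 0.032077 * math.sin(g)
                       - 0.014615 * math.cos(2 * g) - 0.040849 * math.sin(2 * g))
    decl = (0.006918 - 0.399912 * math.cos(g) + 0.070257 * math.sin(g)
            - 0.006758 * math.cos(2 * g) + 0.000907 * math.sin(2 * g)
            - 0.002697 * math.cos(3 * g) + 0.00148 * math.sin(3 * g))
    return eqtime, decl


def sun_times_utc(lat_deg: float, lon_deg: float, day: date):
    """Approximate (sunrise, sunset) in minutes after 00:00 UTC.

    Values can fall outside 0..1440 far from the prime meridian.
    Returns "polar_day" or "polar_night" if the sun never crosses the horizon.
    """
    eqtime, decl = _solar_terms(day)
    lat = math.radians(lat_deg)
    cos_ha = (math.cos(math.radians(ZENITH_DEG)) / (math.cos(lat) * math.cos(decl))
              - math.tan(lat) * math.tan(decl))
    if cos_ha < -1:
        return "polar_day"
    if cos_ha > 1:
        return "polar_night"
    ha_deg = math.degrees(math.acos(cos_ha))  # sunrise hour angle
    sunrise = 720 - 4 * (lon_deg + ha_deg) - eqtime
    sunset = 720 - 4 * (lon_deg - ha_deg) - eqtime
    return sunrise, sunset


def is_daytime(lat_deg: float, lon_deg: float, when_utc: datetime) -> bool:
    """True if the sun is (approximately) above the horizon at when_utc."""
    times = sun_times_utc(lat_deg, lon_deg, when_utc.date())
    if times == "polar_day":
        return True
    if times == "polar_night":
        return False
    sunrise, sunset = times
    now = when_utc.hour * 60 + when_utc.minute
    # The modulo keeps this correct when sunrise falls on the previous
    # UTC day, which happens far east of Greenwich (e.g. Australia).
    return (now - sunrise) % 1440 <= sunset - sunrise
```

A cron job firing every half hour could then call something like `is_daytime(51.5, -0.13, datetime.now(timezone.utc))` and bail out early at night, at a cost of a few microseconds instead of 75 cents.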

It’s just astonishing how many thoroughly solved software problems are now being delegated to LLMs.

It shows a fundamental misunderstanding/delusion about what an LLM actually is.

Couldn’t you make whatever script your cron job runs also adjust the timing of the cron job to move with the sunrise / sunset?
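A minimal sketch of that idea: given a sunrise or sunset time (computed however you like, as minutes after midnight), the script builds the crontab line for its own next run. `cron_line_for` and the command path are hypothetical, and this assumes the cron daemon runs on the same clock and timezone as the input minutes. Actually writing the line into the crontab is left out.

```python
def cron_line_for(event_minutes: float, command: str, offset_min: int = 0) -> str:
    """Return a crontab entry that runs `command` daily at event_minutes + offset_min.

    event_minutes is minutes after midnight; values below 0 or at/above 1440
    are wrapped onto a single day.
    """
    total = int(round(event_minutes + offset_min)) % 1440
    hour, minute = divmod(total, 60)
    # Crontab field order: minute, hour, day-of-month, month, day-of-week, command.
    return f"{minute} {hour} * * * {command}"


# Schedule a (hypothetical) script 30 minutes after a sunrise at ~06:05.
print(cron_line_for(364.9, "/home/me/bin/lights-off", offset_min=30))
# prints "35 6 * * * /home/me/bin/lights-off"
```

Since sunrise only drifts by a minute or two per day, rewriting the entry once a day is plenty.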

Doubt he used the term cron job correctly. He didn’t set up an actual cron job, and even if he did, he probably let the LLM set it up for him.

This is exactly what Ed Zitron is talking about when he says AI psychosis is more widespread than anyone wants to admit. Acting like you made a dumb mistake by not constraining your LLM tool when the dumb mistake was thinking this requires an LLM tool and then writing “lessons learned” is cuckoo bananas behavior.

I hesitate to defend this guy, but the way to learn to do complex things with a tool is to learn to do simple things with it. It’s fully defensible to say that LLMs are not a valid tool to use, but it’s not accurate to represent him as thinking that having his stupid bot check the time every half hour is a good use of his money; he’s just fucking around so he can learn things and attract attention.

OpenClaw’s design has drawn scrutiny from cybersecurity researchers and technology journalists due to the broad permissions it requires to function effectively. Because the software can access email accounts, calendars, messaging platforms, and other sensitive services, misconfigured or exposed instances present security and privacy risks.

OpenClaw is a tool where you hand over access to your computer to an LLM to do what it wants with it. I think that is kinda psychotic, don’t you?

And I am personally glad to learn about it from other people’s mistakes and experiences.

It wouldn’t be the first thing that sounded crazy but changed the world.

Most of the time it turns out that the crazy sounding thing is crazy and I appreciate easily keeping up without having to chase every rabbit hole.

Why even use an LLM for that? That seems like the completely wrong use-case for an LLM.

If only computers had a much more efficient and reliable way to tell time

Like an 8GB local LLM? Surely that’s what you mean. Running at 100 watts sure beats $20 a night.

And if only you could set a reminder on one, even based on this reliable time telling.

Impossible though, better just load $1000 into my Definitely Not a Scam Ai to remember to buy milk, or whatever.

This is your AI speaking, reminding you to buy the jet, just like you asked.

Much obliged! Say, ai that I trust implicitly, do you have a number or wallet I should send money to pay for the jet!?

Just fill in your credit card details and security code.

Ah sweet!

7085 6775 6573 3333 666

Thanks so much, ai that i love and know would never do anything bad ever!

All these comments are missing that the goal here was to learn how to use that AI tool, which obviously not using the AI tool would not accomplish.

Yes the entire thing is idiotic, but it’s disingenuous to say he could have used an alarm instead. No, using an alarm would not achieve the goal of learning how the AI tool works by giving it a simple task.

A much more laughable fact is that the guy couldn’t even write his post without an LLM doing it for him.

This is like a CS 101 concept. How do AI bros not know how to use an API other than Anthropic’s?

This costs so little that my brain would burn more value in noodle calories just doing the math to figure out how much I pay for like 1 joule of electricity.

How many noodle calories are equal to one potato calorie?

Strictly speaking they’re equal; a calorie is 4.184J by definition.

But a correct answer depends on bioavailability, which is subject to the noodle recipe, the potato variety, how they’re cooked, and the eater’s own physiology.

The short answer is, we have no idea!

Which is heavier: a ton of bricks or a ton of feathers?

You thought computing had become too bloated in recent times? Now you get to kill a tree a day to perform the same job as a 0.10€ microcontroller

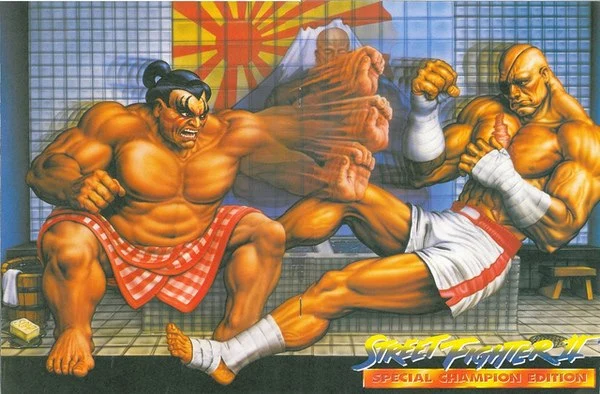

Has this energy