I couldn’t keep up with that. It really tore my attention apart.

This is why I can’t buy DRAM

AI has achieved the intelligence of the average voter

I’m convinced this is why people are so seemingly impressed with AI. It’s smarter than the average person because the average person is that ignorant. To these people these things are ungodly smart, and because of the puffery they don’t feel talked down to, which increases their perception of its intelligence; it tells them how smart and clever they are in a way no sentient entity would ever do.

Yes. Remember that these things have been largely designed and funded by companies who make their money from advertising and marketing. The purpose of advertising and marketing is to convince people of something, whether that thing is actually true or not. They are experts at it, and now they have created software designed to convince people of things, whether it is actually true or not. Then they took all the combined might of their marketing and advertising expertise and infrastructure, including the AI software itself, and set it to the task of convincing people that AI is good and is going to change the world.

And everyone was convinced. Whether it is actually true or not.

Lmao

Hey don’t insult the average voter like that. This post demonstrates that ai has achieved the dementia level of Trump.

Now ask it if 1/3 is smaller than 1/4.

A third pound burger for the same price as a quarter pounder? Hell yeah count me in! (~1980’s me)

Instead of advertising it as “a third,” maybe they should’ve dumbed it down a bit:

“More meat, better value!”

But according to the article you linked, these third pound burgers are still available at A&W after all these years. So at least their failed marketing campaign didn’t destroy them.

Obviously it’s 2012 again!

2013 never happened. We just keep repeating 2012 over and over to see if we can make the world end this time around.

Side note: that’s not a Mayan calendar.

It’s tenochtitlstlepekalpanamixtlatxiuatl. Go straight for ten blocks and then turn right next to the Damian Gonzales y Pavon de la Huerta Porfirio Ybarra hardware store. Ask for Inez, she’s the one who is totally requetetlalpan. Just past the little hill from there, you can see that’s where it was from.

What I don’t like are the loincloths.

So when one uses AI on Ecosia, are they helping to plant a tree or burn one? Perhaps it’s a toss-up.

±0

Task unsuccessfully failed successfully.

Something, something “leap years”. Well that explains it.

That answer was wrong. So therefore, that answer was correct. No. Yes. Maybe.

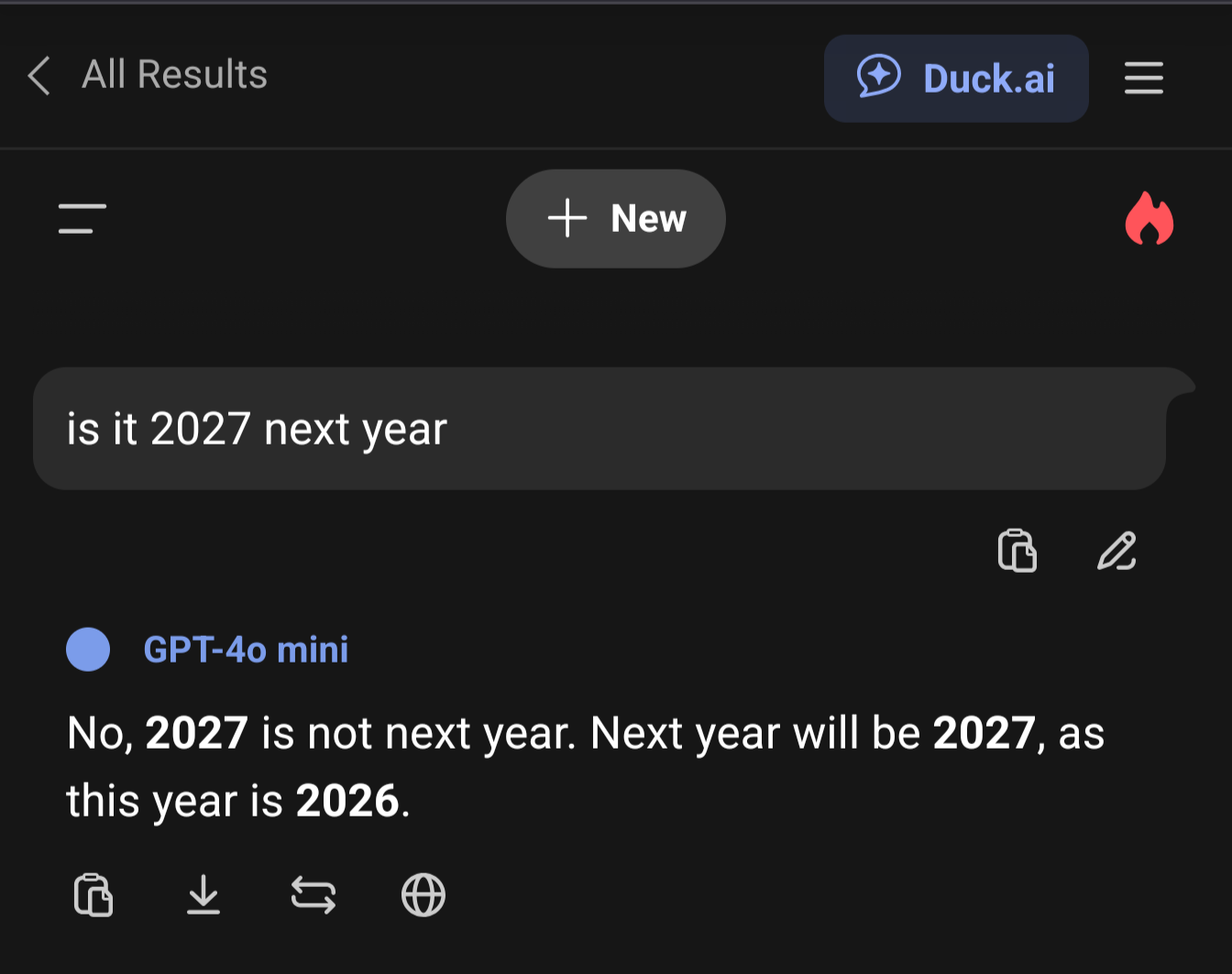

It’s pretty obvious how this happened.

All the data it has been trained on said “next year is 2026” and “2027 is two years from now” and now that it is 2026 it doesn’t actually change the training data. It doesn’t know what year it is, it only knows how to regurgitate answers it was already trained on.

nah, training data is not why it answered this (otherwise it would have training data from many different years, way more than from 2025)

There are data weights for recency, so after a certain point “next year is 2026” will stop being weighted over “next year is 2027”

It’s early in the year, so that threshold wasn’t crossed yet.

Maybe it uses the most recent date in the dataset for its reference to datetime?

I wish I could forcibly download that understanding into the brains of everyone freaking out about AI enslaving humanity.

It also happened last year if you asked whether 2026 was next year, and that was at the end of last year, not the beginning

This instance actually seems more like ‘context rot’. I suspect Google is just shoving everything into the context window cuz their engineering team likes to brag about 10M-token windows, but the reality is that it’s preeeeettty bad when you throw too much stuff in.

I would expect even very small (4b params or less) models would get this question correct

This is actually the reason why it will never actually become general AI. Because they’re not training it with logic, they’re training it with gobbledygook from the internet.

It can’t understand logic anyway. It can only regurgitate its training material. No amount of training will make an LLM sapient.

Based on what?

Math, physics, the fundamental programming limitations of LLMs in general. If we’re ever gonna actually develop an AGI, it’ll come about along a completely different pathway than LLMs and algorithmic generative “AI”.

Based on what LLMs are. They predict token (usually word) probability. They can’t think, they can’t understand, they can’t question things. If you ask one for a seahorse emoji, it has a seizure instead of just telling you that no such emoji exists.
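To make “predict token probability” concrete, here is a toy sketch. The probabilities are made up, and `next_token` is a hypothetical helper, not any real model’s API. A real LLM computes these probabilities with a neural network over a huge vocabulary, but the last step looks roughly like this: pick a token from a distribution.

```python
import random

def next_token(probs, greedy=True, rng=None):
    """Pick the next token from a {token: probability} mapping.

    greedy=True takes the single most likely token;
    greedy=False samples in proportion to the probabilities.
    """
    if greedy:
        return max(probs, key=probs.get)
    rng = rng or random.Random()
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

# Made-up distribution for the token following "Is it 2027 next year?"
probs = {"No": 0.6, "Yes": 0.4}
print(next_token(probs))                # most likely token
print(next_token(probs, greedy=False))  # sampled token, may differ per run
```

Nothing in that step checks whether the chosen token is true; it only checks which one is likely.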


This isn’t just the machine being ignorant or wrong.

This is a level of artificial stupidity that is downright eldritch and incomprehensible

Maga math

Yeah, this “sequence guesser” is definitely something we should have do all the coding, and control the entire Internet…

Yeah, “AI” is just statistical analysis. There’s more data in its database that indicates 2027 is not next year, and only a few days’ worth of data that indicates that it is. Since there’s more data indicating 2027 is not next year, it chooses that as the correct answer.
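A toy sketch of that majority-vote idea, assuming a hypothetical corpus and a “model” that just returns the most frequent statement. Real LLMs are not a frequency table, and the counts here are invented; this only shows how a year of stale data can outvote a few days of fresh data.

```python
from collections import Counter

def most_common_answer(corpus):
    """Return the statement that appears most often in the corpus."""
    counts = Counter(corpus)
    return counts.most_common(1)[0][0]

# Invented counts: a year of "2026" statements vs. a few days of "2027" ones.
corpus = ["next year is 2026"] * 365 + ["next year is 2027"] * 10
print(most_common_answer(corpus))
```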

LLMs are a useful tool if you know what they are and what their strengths and weaknesses are. But they’re not intelligent and don’t understand how things work. But if you have some fuzzy data you want analyzed, and you validate the results, it can save some time getting to a starting point. It’s kinda like Wikipedia in a way: you get to a starting point faster, but you have to take things with a grain of salt and put in some effort to make sure things are accurate.

Google pulled the AI overview from the search, but “is it 2027 next year ai overview” was a suggested search because this screenshot is making the rounds. The AI overview now has all the discussion of this error in its data set and mentions it in its reply, but still fails.

Ha!

It’s amazing how it works with like every LLM

I’m guessing they’re still stuck on thinking it’s 2025 somehow, which is pretty crazy since keeping accurate track of dates and numbers SHOULD be the most basic thing an AI can do.

AI doesn’t “know” anything. It’s a big statistical probability model that predicts words based on context. It is very specifically BAD at math and dates etc. because it works based on words, not numbers. Models can’t do math

Yeah, I’m being colloquial with saying “thinking,” and that makes sense! Computers that are bad at math, what genius!

Actually, it’s what traditional software should be very good at, LLM is actually inherently kind of bad at it. Much work has gone into getting the LLMs to detect mathy stuff and try to shuffle off to some other technology that can cope with it.
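For comparison, a minimal sketch of how traditional software answers the same question, using Python’s standard `datetime` module. The function name `is_next_year` is made up for illustration.

```python
from datetime import date

def is_next_year(candidate_year, today=None):
    """Return True if candidate_year is the calendar year after today's."""
    today = today or date.today()  # ask the system clock, not training data
    return candidate_year == today.year + 1

# Pinning the date so the result does not depend on when this is run.
print(is_next_year(2027, today=date(2026, 1, 15)))
```

The difference is that this reads the current date from the clock every time, so there is nothing to go stale.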

Good point!

The issue is context rot. Try again with gpt oss or llama scout (neither of them has an internet search option); they answer correctly, I just checked

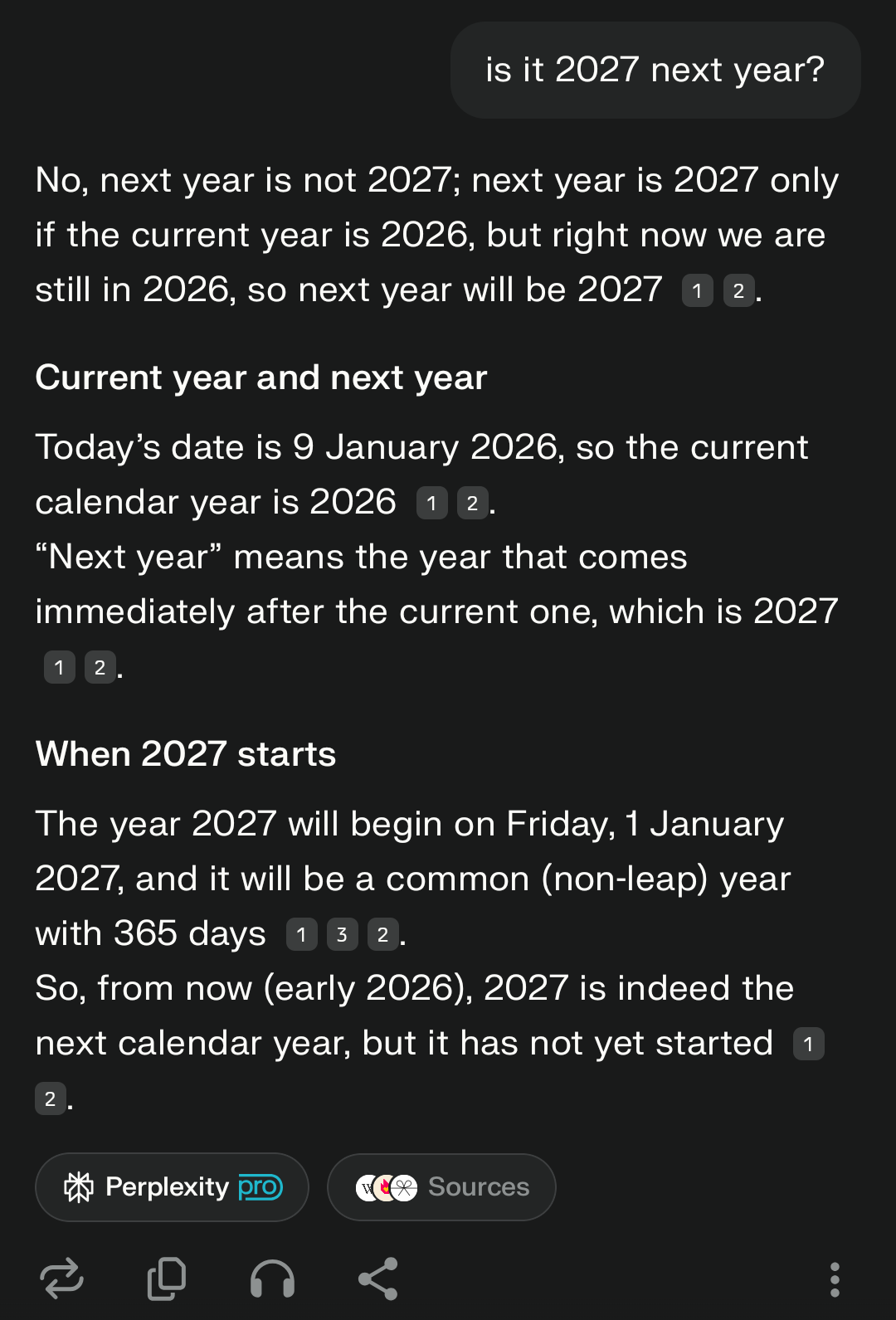

Copilot was interesting: Is it 2027 next year? Not quite! Next year will be 2026 + 1 = 2027, but since we’re currently in 2026, the next year is 2027 only after this year ends. So yes—2027 is next year, starting on January 1, 2027.

I recently found this (it can be replicated with many values):

Talking to AI is like talking to an 8-year-old. Has just enough information to be confidently wrong about everything.

An eight year old that takes everything literally.

I love how overexplained this wrong answer is

Well, that’s part of it. Broadly speaking, they want to generate more content in the hopes that it will latch on to something correct, which is of course hilarious when it’s confidently incorrect. But for example: Is it 2027 next year?

Not quite! Next year will be 2026 + 1 = 2027, but since we’re currently in 2026, the next year is 2027 only after this year ends. So yes—2027 is next year

Here it got it wrong, based on training, then generated what would be a sensible math problem, sent it off to get calculated, then made words around the mathy stuff, then the words that followed are the probabilistic follow up for generating a number that matches the number in the question.

So it got it wrong, and in the process of generating more words to explain the wrong answer, it ends up correcting itself (without ever realizing it screwed up, because that discontinuity is not really something it trained on). This is also the basis of ‘reasoning chains’, generate more text and then only present the last bit, because in the process of generating more text it has a chance of rerolling things correctly.

This is worth at least 500 trillion dollars!

We have a virtual parrot, it’s not “intelligence” in any way. So many suckers